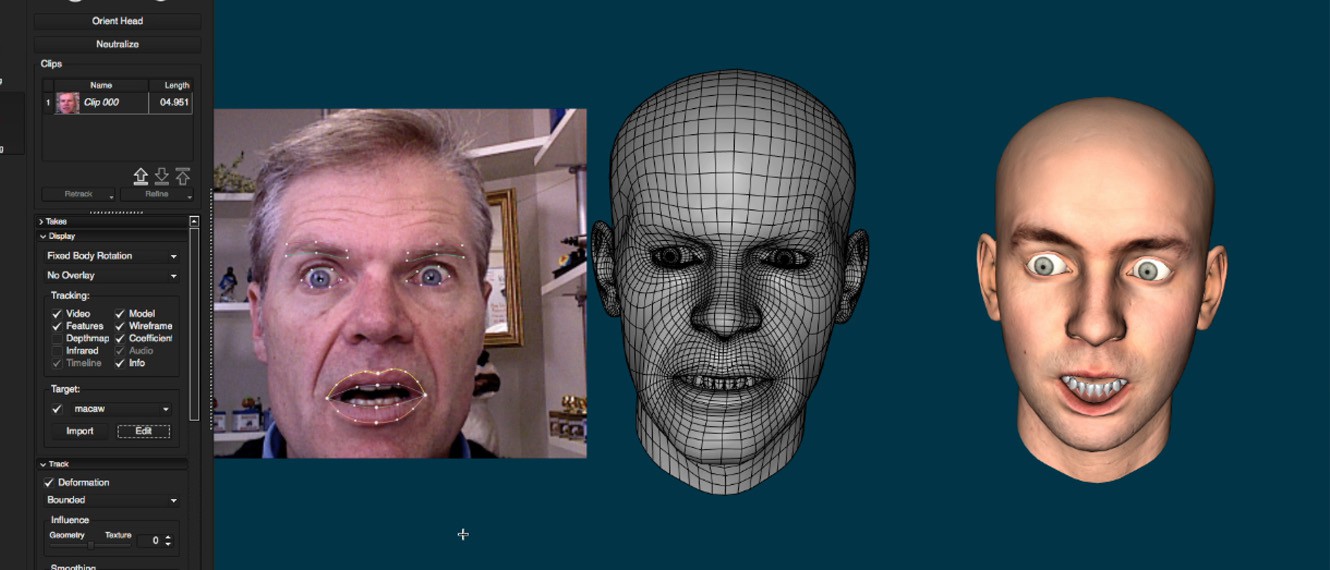

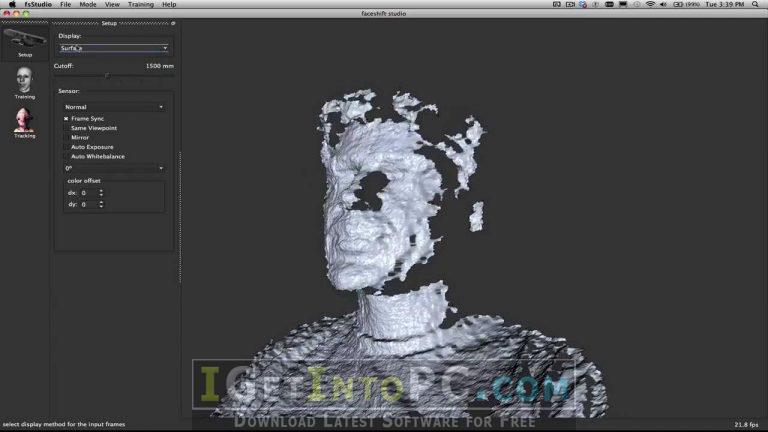

Performance-driven facial animation is an increasingly important tool. One can aim to map a performance based on direct point correlation, or via a system that relies on intermediate blendshapes and matching, with tracking leading to similar blendshapes in the source and target models. This is conceptually simple and is becoming widely available as a tool to help animators.

Faceshift is a product that allows real-time facial animation input, or digital puppeteering, via input from a number of different depth cameras onto a rigged face. Coupled with markerless capture via depth sensing with inexpensive cameras, animators today can move from just a mirror for exploring an expression at their desk to a 3D real-time digital puppet, inexpensively and with almost no lag. There have been several versions of Faceshift, and this is an area with an active research community, with several recent SIGGRAPH papers, but Faceshift is one of the most popular commercial solutions on the market today.

wp-content/uploads/2015/08/Faceshift_screen.mp4

Watch a demo of Faceshift by the author, including the training phase with FACS-like poses and the real-time tracking stage with different animated characters. Note, there is no sound in this video.

Faceshift's core technology goes back to the papers Face/Off: Live Facial Puppetry (Weise et al., 2009) and Realtime Performance-Based Facial Animation (Weise et al., 2011), both led by Dr Thibaut Weise, today CEO of Faceshift. The idea of a digital mirror that mimics one's face was used recently by the team at ILM with their nicknamed ‘monster mirror’, which allowed Mark Ruffalo to see his performance in real time in his trailer as he prepared for the latest Avengers. While the ILM system does not use Faceshift, the two share some conceptual overlap. In fact, Hao Li, now at USC, was a researcher at ILM and helped develop an earlier system than the one Ruffalo used. He was also one of the researchers involved in the genesis of Faceshift, working with the team before they formed the company. Today Li contributes to research in this area and is presenting several key papers at SIGGRAPH (see below).

Faceshift has published on calibration-free tracking (SIGGRAPH 2014, Sofien Bouaziz). The main difference between the newer research and the Faceshift product is the issue of calibration and vertex tracking (see below). Faceshift's CTO, Dr Brian Amberg, points out that from their point of view “calibration free tracking is not as accurate as tracking with calibration, because the resulting muscle signal is much cleaner, there is less crosstalk between muscle detections and the range of the activations can also be determined”. Faceshift's professional product line therefore calibrates, though one can also simply scan based on a neutral expression, which gives pretty good tracking results as is and which, they claim, is “already on par with the ILM method”.
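As a rough illustration of the blendshape-based mapping mentioned in this article, the sketch below applies a set of tracked blendshape weights to a target character by adding weighted per-vertex offsets to its neutral pose. This is a generic linear blendshape model, not Faceshift's actual implementation. The function name `retarget`, the shape names, and the tiny two-vertex "mesh" are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def retarget(
    weights: Dict[str, float],
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
) -> List[Vec3]:
    """Pose a target rig from tracked blendshape weights.

    weights: activation per blendshape name, as tracked on the performer.
    neutral: vertex positions of the target character's neutral face.
    deltas:  per-blendshape vertex offsets from neutral on the target rig.
    Assumption: source and target share blendshape names; shapes the
    target rig does not have are simply skipped.
    """
    posed = [list(v) for v in neutral]
    for name, w in weights.items():
        shape = deltas.get(name)
        if shape is None:
            continue  # target rig has no matching blendshape
        w = min(1.0, max(0.0, w))  # clamp activation to [0, 1]
        for i, offset in enumerate(shape):
            for axis in range(3):
                posed[i][axis] += w * offset[axis]
    return [tuple(v) for v in posed]


if __name__ == "__main__":
    # Hypothetical two-vertex target rig with two blendshapes.
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    deltas = {
        "jaw_open": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],
        "smile": [(0.5, 0.0, 0.0), (0.0, 0.5, 0.0)],
    }
    tracked = {"jaw_open": 0.5, "smile": 1.2, "brow_up": 0.3}
    print(retarget(tracked, neutral, deltas))
```

Because only the weights travel from performer to character, the source and target faces can have completely different geometry, which is what makes the blendshape route attractive compared with direct point correlation.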
